Why GraphQL Beats REST for Magento 2 B2B Speed

See why GraphQL is overtaking REST for Magento 2 B2B speed. Learn patterns, caching, and a migration plan to cut latency and lift conversion.

Personalization is now a baseline expectation in online retail. Shoppers want products that fit their needs in the moment, not generic lists. AI makes that possible at scale. The shift is from static segments to real-time, one-to-one experiences that adapt to context, inventory, and business goals. When you do it well, you lift conversion, average order value, and retention. When you miss, you add noise and erode trust. This post breaks down how hyper-personalized recommendations work, the architecture behind them, the models that matter, and the metrics and guardrails that keep systems reliable. You will also get a pragmatic roadmap to start small and scale fast.

Hyper-personalization tailors content for each user and session using behavior, context, and intent signals. It goes beyond “people like you bought X” to consider where the user came from, what they just did, and what the business is trying to optimize. It also respects constraints like stock levels, pricing rules, and brand safety. The goal is not only to predict relevance, but to decide the best next action in real time.

The result is a dynamic, session-aware experience across homepage, PDP, cart, email, and on-site search.

Several shifts make hyper-personalization practical today. First, first-party event data is richer and easier to capture across web and apps. Second, vector search and efficient embedding models allow fast similarity search at scale. Third, MLOps platforms make it feasible to version data, train models continuously, and ship updates safely. Finally, privacy changes push teams to invest in first-party data and consented use, raising the bar for governance and measurement.

Together, these capabilities enable real-time decisioning that balances user value with operational and compliance requirements.

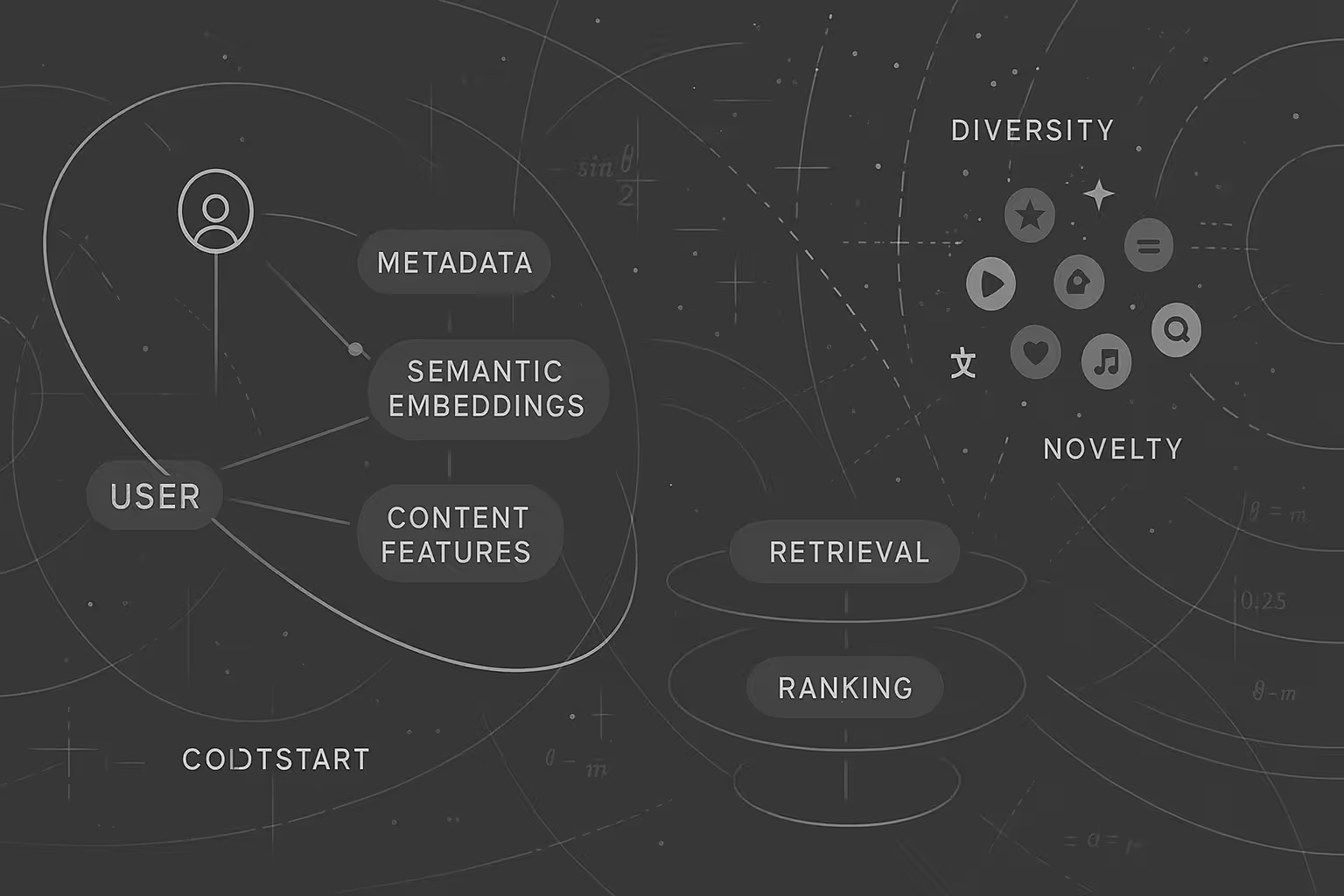

Winning systems share a common blueprint that turns raw events into low-latency decisions:

Latency budgets typically target under 150 ms for retrieval plus ranking. Caching and precomputation reduce tail latency while keeping content fresh.

No single model wins everywhere; most production systems use a multi-stage stack. Collaborative filtering and matrix factorization capture user–item affinities when you have interaction history. Two-tower neural recommenders learn embeddings for users and items and scale well for retrieval. Sequence models (e.g., transformer-based) learn short-term intent from recent actions. Contextual bandits balance exploration and exploitation in real time. Reinforcement learning can optimize long-term value but needs careful reward design and guardrails.

Start with a strong baseline (e.g., matrix factorization or two-tower retrieval) and layer complexity only when metrics justify it.

Real-time delivery is as much a systems problem as a modeling one. Define strict latency goals, then design for them:

Keep response payloads lean, and enforce SLAs with circuit breakers to protect page performance.

Measure what the business values and prove causality:

Governance keeps systems trustworthy. Add explainability for sensitive placements. Set fairness and diversity constraints to prevent narrow loops. Minimize and protect PII, enforce consent by use case, and log model decisions for audits. Monitor data drift, feature freshness, and outlier spikes; auto-roll back when quality degrades.

You do not need to build everything on day one. Sequence your investments:

This staged approach compounds results while reducing risk and change-management load.

If you are ready to validate your roadmap or need help standing up real-time recommendations, Encomage can support strategy, architecture, and pilot delivery. Let us help you de-risk the first wins and scale what works.

Let’s build something powerful together - with AI and strategy.

.avif)